Building a courtroom card game with Cursor custom modes and AI

Ever get that itch to build something new, even if you’re not sure you know what you’re doing? That was me at Clio’s latest hackathon. The only rule: use AI tools to make… well, anything.

I’ve poked around in Unity before. I built a Flappy Bird clone I was actually pretty proud of. But what I really enjoy are card games. Magic: The Gathering, Gwent, Balatro, Wingspan, Slay the Spire. If it’s got cards and combos, I’m in. Designing one, though? Never tried it.

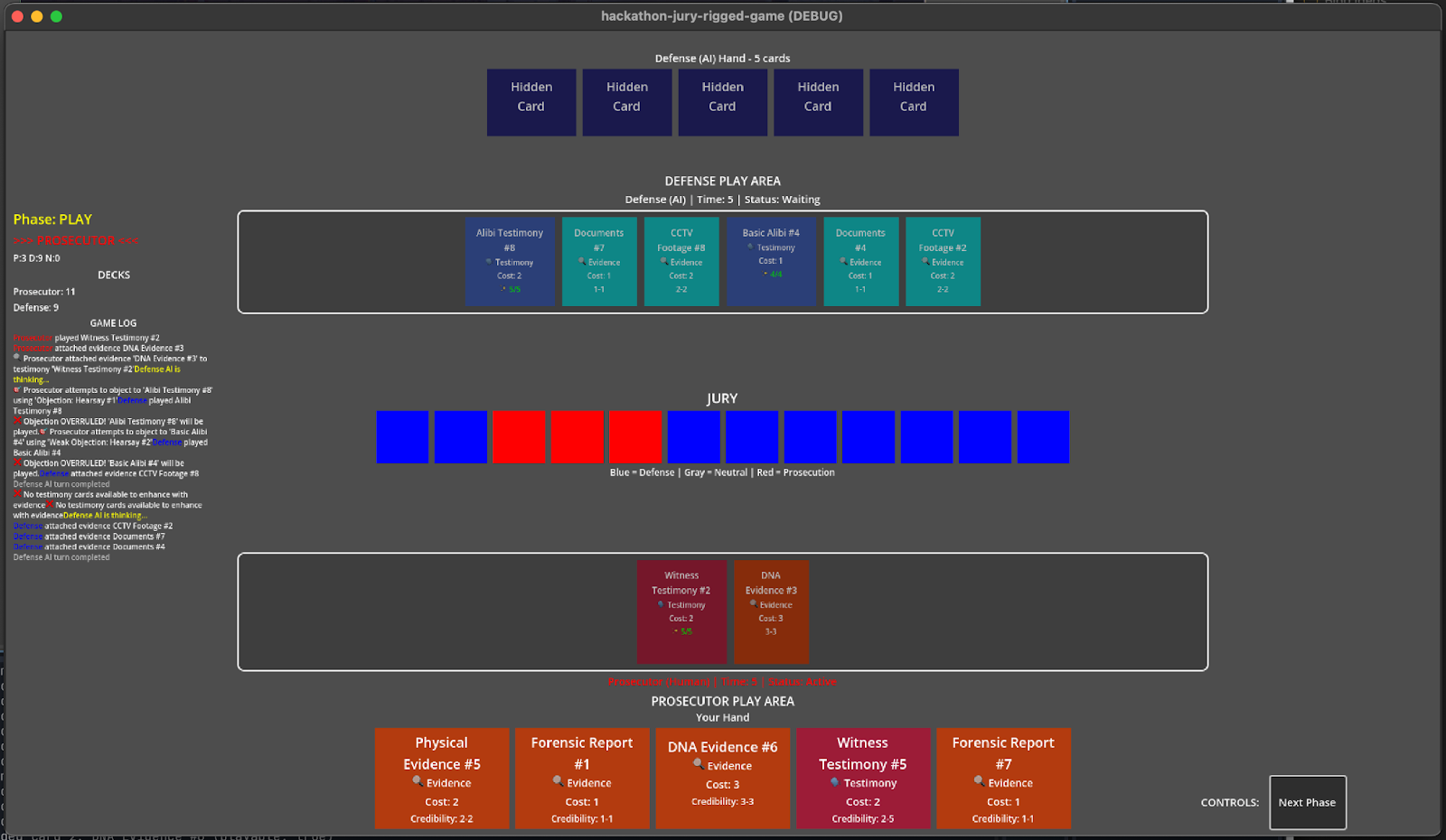

Since Clio makes software for lawyers, a courtroom theme felt like the obvious move. To be honest, my “trial” is more Law & Order rerun than actual legal drama.

So here’s what I did: I grabbed Cursor’s new custom modes, which are still in beta but promising, and set out to build a two-player courtroom card game in Godot, an engine I had never touched before. What could go wrong? (Spoiler: a lot, but that’s half the fun.)

Why custom modes made this experiment possible

The hackathon theme was simple. Build anything you want, but you have to use AI tools. Cursor IDE is already built around AI, but its default agent and ask modes are pretty general. They can answer questions or help with code, but when you are trying to build something with a lot of moving parts, you need more than just a helpful assistant.

Before custom modes, I had been trying to do something similar by keeping persona prompts in markdown files and using snippets in Alfred 5 to insert instructions. This kind of worked, but it was clunky. It did not let me set strict tool permissions or pick the default AI model for each persona, which made things messy and less secure.

That is where custom modes come in. With the beta feature, you can create specialized agents for different roles. I wanted to see if having a Product Owner, Architect, Project Manager, Developer, Code Reviewer, and Technical Writer would make the process smoother. Each one could focus on a specific part of the project, just like a real team.

My hope was that these custom modes would let me keep context and instructions clear, so the AI would not get lost or confused as the project grew. I figured if I could get the agents to work together, maybe I could build something more complex than a simple demo. This was my chance to see if custom modes could actually help manage a real, multi-phase project, not just answer one-off questions.

Building a game with Cursor, Godot, and a team of AI agents

I set up the project with Cursor’s custom modes to mirror a software development team. Each mode had its own job, model, and tool permissions. Here’s how I built each one:

Product Owner

I used the PRD sample mode from playbooks.com without modifications. The model was Claude 4 Sonnet (thinking). Tools enabled: search codebase, search web, read file, edit and reapply (for the PRD file). I gave it high-level requirements and answered its questions, and it wrote the initial PRD for the game.

Architect

This mode was based on the Architect sample mode from playbooks.com. The model was o3. Tools enabled: search codebase, search web, grep, search files, list directory, read file, fetch rules, terminal, edit and reapply (for the design doc file). I added the Godot docs to Cursor’s indexed docs and had the Architect review the PRD and generate a system design doc.

Project Manager

I used the Plan sample mode from playbooks.com. The model was GPT-4.1. Tools enabled: search codebase, read file, edit and reapply (for the plan file). The Project Manager reviewed the PRD and design doc, then created the implementation plan.

Godot Developer

This mode was custom. I used Gemini to help generate the prompt, following the format of the other playbooks.com examples. The model was Claude 4 Sonnet (thinking), with all tools enabled. I provided the PRD, design, and plan, and the Developer started implementing features. Using AI to help write prompts for other AI is surprisingly effective, since it can spot gaps or ambiguities in your instructions and suggest ways to make them clearer or more actionable.

Code Reviewer

I created this mode with help from Gemini for formatting and scope. The model was o3. Tools enabled: search codebase, search web, grep, search files, list directory, read file, fetch rules, terminal. At first, I forgot to give the Code Reviewer terminal access, and I was confused about why it kept trying to use git diff and failing. Once I fixed the permissions, it worked as expected. I also had to add scope guards so it would focus on PRs and diffs, not the full files.

Technical Writer

This mode was based on the Content Writer sample mode from playbooks.com. The model was GPT-4.1. Tools enabled: search codebase, search web, read file, edit and reapply (for the output files). It generated the technical docs and the user manual.
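To make the setup above easier to scan, here is a minimal Python sketch that writes the six modes down as data. It is only an illustration: Cursor does not store custom modes in this format, and the `Mode` class, the `MODES` table, and the `missing_tools` helper are hypothetical names. The models and tool lists come from the descriptions above. `ALL_TOOLS` is an assumption that stands in for "all tools enabled" and contains only the tools named in this post.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class Mode:
    """One custom mode: a persona with a model and a set of allowed tools."""
    name: str
    model: str
    tools: FrozenSet[str]


# Read-only tools shared by the Architect and Code Reviewer.
INSPECT = frozenset({
    "search codebase", "search web", "grep", "search files",
    "list directory", "read file", "fetch rules",
})

# Assumption: stands in for "all tools enabled". It only contains the
# tools named in this post, not Cursor's actual full tool list.
ALL_TOOLS = INSPECT | {"terminal", "edit and reapply"}

MODES = {m.name: m for m in [
    Mode("Product Owner", "Claude 4 Sonnet (thinking)",
         frozenset({"search codebase", "search web", "read file",
                    "edit and reapply"})),
    Mode("Architect", "o3", INSPECT | {"terminal", "edit and reapply"}),
    Mode("Project Manager", "GPT-4.1",
         frozenset({"search codebase", "read file", "edit and reapply"})),
    Mode("Godot Developer", "Claude 4 Sonnet (thinking)", ALL_TOOLS),
    # The reviewer can inspect and run commands, but cannot edit files.
    Mode("Code Reviewer", "o3", INSPECT | {"terminal"}),
    Mode("Technical Writer", "GPT-4.1",
         frozenset({"search codebase", "search web", "read file",
                    "edit and reapply"})),
]}


def missing_tools(mode: Mode, needed: Iterable[str]) -> List[str]:
    """Return the tools a task needs that the mode is not allowed to use."""
    return sorted(set(needed) - mode.tools)
```

Checking a mode against the tools its job needs would have flagged the Code Reviewer mistake early: running `git diff` needs the terminal, so a reviewer created without it fails that check.

```python
reviewer = MODES["Code Reviewer"]
no_terminal = Mode(reviewer.name, reviewer.model, reviewer.tools - {"terminal"})
print(missing_tools(no_terminal, {"terminal", "read file"}))
```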

After the initial planning, I mostly stopped using the Product Owner and Architect due to time constraints. If I had more time, I would have kept them involved for milestone planning and design reviews. The dev loop used the Project Manager for planning, the Developer for implementing, and the Code Reviewer for reviewing and flagging issues. The Developer AI sometimes struggled with editing files, occasionally trying wild solutions like deleting the whole file or writing a script to make a one-line change. And let’s be honest, the game’s visuals are… functional.

Next time, I’m adding a graphic designer agent to the team.

Debugging, dead ends, and what did I just break?

Every project hits a few walls, and this one was no different. Honestly, the learning curve for Godot itself, at least for a basic UI like this, wasn’t that bad. The real issue was that since the Developer agent was doing almost everything, I wasn’t actually learning much about Godot as we moved along. If I had tweaked the agents to be more didactic, I probably would have picked up more about the engine. That’s something I’d definitely do for future projects, especially when working on unfamiliar code.

Cursor’s custom mode UI didn’t help either. The editor for prompts and settings is tiny and kind of annoying to use, especially when you want to tweak a lot of details or copy in longer instructions.

Agent confusion was another big hurdle. I tried to stick to debugging and implementing everything through prompts, but sometimes the AI just couldn’t get it right. The UI was the biggest pain point. Since I didn’t provide any visual designs, the Developer agent kept misunderstanding what I wanted. I’d ask for a simple layout change, and it would either ignore the request or break something else in the process. More than once, I had to step in and fix things manually.

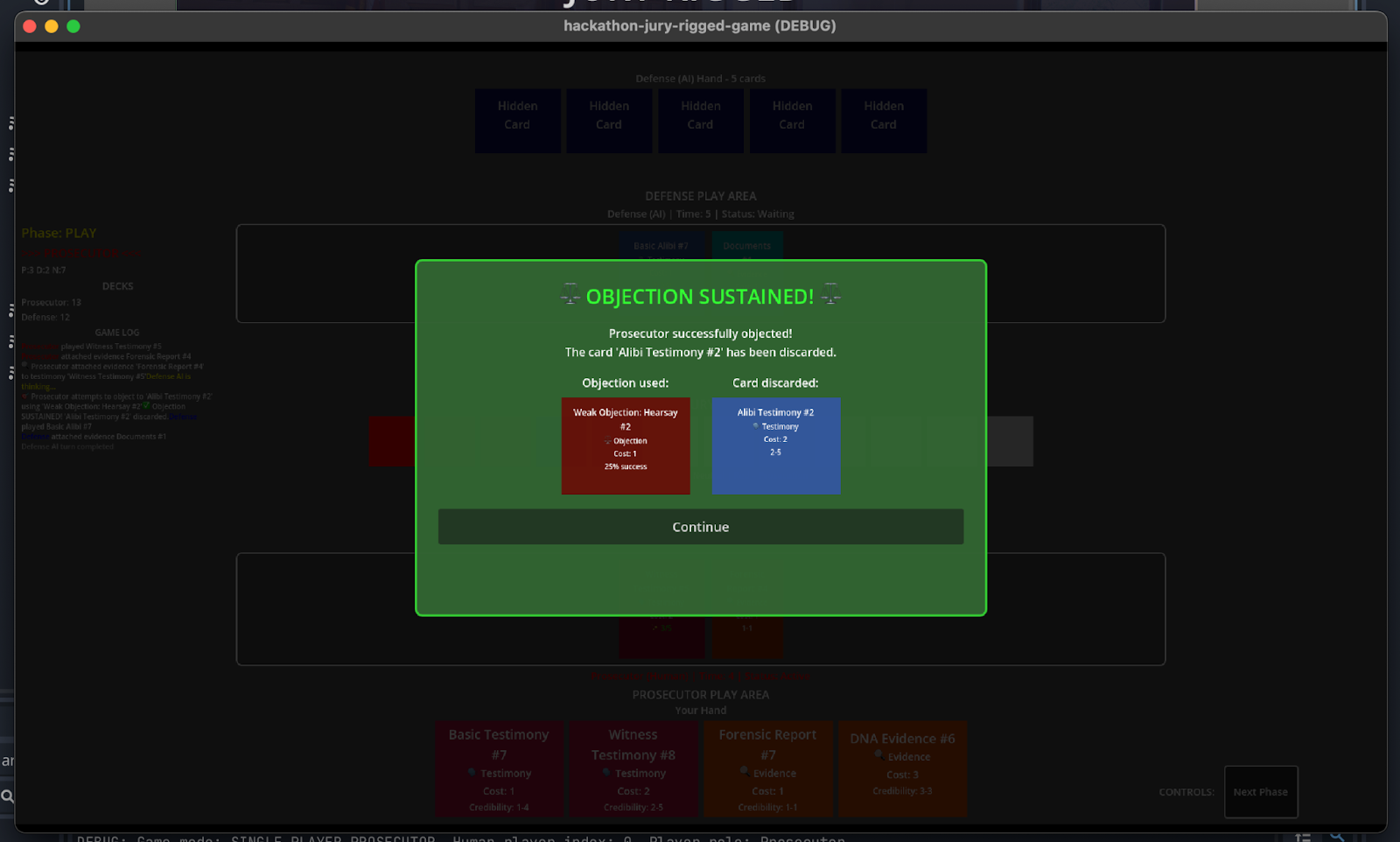

There were some classic “what did I just break?” moments. A small change to the card effect logic once broke the entire turn system. The Code Reviewer caught it, but only after a few failed test runs. Another time, the Developer tried to refactor a UI scene and ended up deleting half the buttons. Debugging these issues was a mix of AI suggestions and old-fashioned trial and error.

Lessons, surprises, and what I’d do differently

Here’s what stood out:

What worked:

- Detailed, focused instructions made a big difference

- Specialized agent personas kept things organized (at least early on)

- Iterative planning with the Project Manager kept things moving

What didn’t:

- The Developer agent sometimes overengineered or got lost in the weeds

- Plan files got huge and hard to manage

- I missed out on learning Godot since the AI did most of the work

- No visual input made UI work tough for the Developer

- Product Owner and Architect were underused after the start

- Tech Writer’s output was underwhelming

Unexpected wins:

- The code review loop worked better than expected

- The Code Reviewer caught missing precondition checks and logic bugs

The user guide the Tech Writer produced was basic, but it did cover the essentials:

“Spend all your time points. Unused time does not carry. Weak objections are cheap but miss often. Save strong ones for clutch plays. Evidence is best on high base credibility testimony.”
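For a sense of what a "missing precondition check" looks like in a game like this, here is a small Python sketch built around the time-point rule quoted above. It is not the game's actual code, which was written for Godot. The `Card`, `Turn`, and `TurnError` names, the card names, and the costs are all hypothetical. The idea is to validate a play before touching any state, so a rejected play cannot leave the turn half-updated.

```python
from dataclasses import dataclass


class TurnError(Exception):
    """Raised when a play violates a precondition."""


@dataclass(frozen=True)
class Card:
    name: str
    cost: int  # time points needed to play the card (hypothetical values)


@dataclass
class Turn:
    time_points: int

    def play(self, card: Card) -> None:
        # Preconditions first: reject the play before mutating anything.
        if card.cost < 0:
            raise TurnError(f"{card.name} has a negative cost")
        if card.cost > self.time_points:
            raise TurnError(
                f"{card.name} costs {card.cost}, "
                f"only {self.time_points} time points left"
            )
        self.time_points -= card.cost

    def end(self, next_budget: int) -> "Turn":
        # Unused time does not carry over: the next turn starts from a
        # fresh budget, whatever is left in this one.
        return Turn(time_points=next_budget)
```

A short usage example, with made-up cards:

```python
turn = Turn(time_points=3)
turn.play(Card("Weak Objection", 1))
try:
    turn.play(Card("Strong Objection", 3))
except TurnError as err:
    print(err)  # the play is rejected and the remaining points are untouched
next_turn = turn.end(next_budget=3)
```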

If I do this again, I’ll make the agents more didactic, keep planning and design roles active, and maybe get the AI to explain its reasoning as it works. And yes, I’ll bring in a graphic designer agent next time.

Final thoughts on building with AI agents

Looking back, this project was a real test of what AI coding tools and custom modes can do. I learned a lot about how to set up workflows, where the AI shines, and where it still needs a human touch. Custom modes made it possible to organize the work and keep things moving, even if some roles ended up underused. The process was far from perfect, but it showed that with the right setup you can get a lot done, even if you’re not an expert in every tool or language involved. There’s still plenty of room for improvement, but it was a solid experiment in pushing what’s possible with AI-assisted development.
